St. Jude Family of Websites

Explore our cutting edge research, world-class patient care, career opportunities and more.

St. Jude Children's Research Hospital Home

- Fundraising

Researchers develop method to dramatically reduce error rate in next-generation sequencing

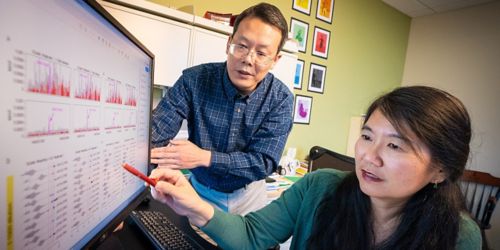

Co-corresponding authors Xiaotu Ma, PhD (left), Computational Biology, and Jinghui Zhang, PhD, Computational Biology chair, illustrate the decreased error rate using CleanDeepSeq.

We set out with a simple goal: find a way to shrink the error rate in next-generation sequencing data. The method we developed reduces that rate by as much as 100-fold, which would likely speed early detection of cancer relapse and other threats. Our findings appeared recently in the journal Genome Biology.

We analyzed next-generation DNA sequencing datasets from St. Jude and four other institutions to identify and suppress common sources of sequencing errors. By modeling the sequencing errors and applying other techniques, we lowered the error rate for DNA base substitutions from 0.1 percent (1 in 1,000) to between 0.01 percent (1 in 10,000) and 0.001 percent (1 in 100,000).
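The arithmetic behind these figures can be checked with a short sketch. It only converts the percentages quoted above into "1 in N" form and computes the fold reduction; the function names are illustrative and are not part of CleanDeepSeq.

```python
def percent_to_one_in_n(rate_percent: float) -> int:
    """Convert a percentage error rate to the N in '1 error in N bases'."""
    return round(100 / rate_percent)


def fold_reduction(before_percent: float, after_percent: float) -> float:
    """How many times smaller the error rate is after suppression."""
    return before_percent / after_percent


if __name__ == "__main__":
    before = 0.1  # percent, the starting rate quoted in the article
    for after in (0.01, 0.001):  # percent, the range quoted in the article
        print(
            f"{before}% (1 in {percent_to_one_in_n(before):,}) -> "
            f"{after}% (1 in {percent_to_one_in_n(after):,}): "
            f"about {fold_reduction(before, after):.0f}-fold lower"
        )
```

The lower end of the range corresponds to a 10-fold reduction and the upper end to the 100-fold figure.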

By making it easier to distinguish signal from noise, in this case a true mutation from a sequencing error, we hope to give patients a head start on cures.

Next-generation sequencing takes away the hay

Early detection of cancer or cancer relapse really is like finding a needle in a haystack, because cancer cells are vastly outnumbered by normal cells at an early stage. This method, which we named CleanDeepSeq, helps eliminate the hay to make it easier to find the needle.
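To put rough numbers on the needle and the hay, here is a small sketch. The sequencing depth and the fraction of mutant DNA are hypothetical values chosen for illustration, and treating the whole substitution error rate as noise at a single position is a simplification. Only the two error rates come from the article.

```python
def expected_reads(depth: int, fraction: float) -> float:
    """Expected number of reads at one position showing a given event."""
    return depth * fraction


def signal_to_noise(depth: int, variant_fraction: float, error_rate: float) -> float:
    """Ratio of expected true-mutation reads to expected error reads."""
    return expected_reads(depth, variant_fraction) / expected_reads(depth, error_rate)


DEPTH = 100_000            # hypothetical: reads covering one position
VARIANT_FRACTION = 0.0001  # hypothetical: 1 mutant copy in 10,000

# Error rates from the article, written as fractions rather than percentages.
ERROR_RATES = {
    "before suppression (0.1%)": 0.001,
    "after suppression, best case (0.001%)": 0.00001,
}

if __name__ == "__main__":
    needle = expected_reads(DEPTH, VARIANT_FRACTION)
    for label, rate in ERROR_RATES.items():
        hay = expected_reads(DEPTH, rate)
        ratio = signal_to_noise(DEPTH, VARIANT_FRACTION, rate)
        print(f"{label}: ~{needle:.0f} true reads vs ~{hay:.0f} error reads "
              f"(ratio {ratio:.1f})")
```

Under these assumptions the true mutation is outnumbered by errors before suppression and outnumbers them afterward, which is the sense in which removing hay exposes the needle.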

Interest in reducing errors and improving data quality has grown as next-generation sequencing costs have fallen. Massively parallel processing means cancer-driving genes can now be sequenced from thousands or hundreds of thousands of cells to find signs of cancer cells long before overt disease appears.

Sequencing errors are a roadblock to detecting the low-frequency genetic variants that are important for cancer molecular diagnosis, treatment and surveillance using deep next-generation sequencing. This study provides the first comprehensive analysis of the sources of such sequencing errors and offers new strategies for improving accuracy.

Limiting next-generation sequencing errors

This study focused on identifying the variety and source of substitution errors in next-generation sequencing data and creating a mathematical error suppression strategy. We analyzed datasets from St. Jude, HudsonAlpha Institute of Biotechnology, the Broad Institute, Baylor College of Medicine and WuXiNextCODE, China.

The analysis revealed several sources of errors, including handling and storage of the patient samples, the enzymes used to amplify patient samples and the sequencing itself. The profiling led us to home in on recognition and suppression of errors related to poor sequencing quality or ambiguity in aligning the patient genome with a reference genome.
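As a rough illustration of suppressing errors tied to poor sequencing quality or ambiguous alignment, the sketch below drops base observations whose Phred-scaled base quality or mapping quality falls below a cutoff before counting alleles. This is a generic filter, not the published CleanDeepSeq procedure; the `Observation` type, the thresholds and the example pileup are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One read's base call at a single genomic position (hypothetical type)."""
    base: str
    base_quality: int     # Phred-scaled confidence in the base call
    mapping_quality: int  # Phred-scaled confidence in the read's alignment


def phred_to_error_prob(quality: int) -> float:
    """Standard Phred definition: Q = -10 * log10(p)."""
    return 10 ** (-quality / 10)


def filter_observations(observations, min_base_quality=30, min_mapping_quality=20):
    """Keep observations that pass both cutoffs (thresholds are assumptions)."""
    return [
        obs for obs in observations
        if obs.base_quality >= min_base_quality
        and obs.mapping_quality >= min_mapping_quality
    ]


def allele_counts(observations) -> Counter:
    """Count how many observations support each base."""
    return Counter(obs.base for obs in observations)


if __name__ == "__main__":
    # Hypothetical pileup: reference base A, with three reads showing T.
    pileup = [Observation("A", 37, 60)] * 8 + [
        Observation("T", 12, 60),  # low base quality
        Observation("T", 36, 5),   # ambiguous alignment
        Observation("T", 38, 60),  # passes both cutoffs
    ]
    print("raw:     ", dict(allele_counts(pileup)))
    print("filtered:", dict(allele_counts(filter_observations(pileup))))
```

In this toy pileup, two of the three reads supporting T are discarded as likely artifacts, leaving one well-supported observation to be weighed against the reference.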

We’re now working to bring CleanDeepSeq to the clinic. This method might also help scientists studying infectious diseases such as influenza and HIV, or any setting where drug resistance is a concern.